Posts by Collection

experience

Computation Theory and Algorithms Laboratory, Institute of Information Science.

Global Routing Team, Design Group.

Computer Vision Team & Drone Team.

Image & Sensing Laboratory.

Neural Network Team, Advanced Creativity.

portfolio

Moment (IoT Product Prototype)

In this project, we brought together students from engineering and design backgrounds to create innovative IoT products. We embedded several tags in furniture in different houses and designed an algorithm to read the communication between the tags. Our goal was to create a stress-free relationship between people. The product was nominated for the I Design Award, Taiwan.

Deep Routing Congestion Estimator

In modern physical synthesis of VLSI circuits, routability has become a primary concern. We have noticed that the correlation between global routing (GR) estimation and detailed routing (DR) is essential to making a design routable. In this project, we constructed a neural network that applies supervised learning to congestion estimation. The model can predict the actual congestion and DRC violations at the global routing stage, or can even be embedded into the placement stage.

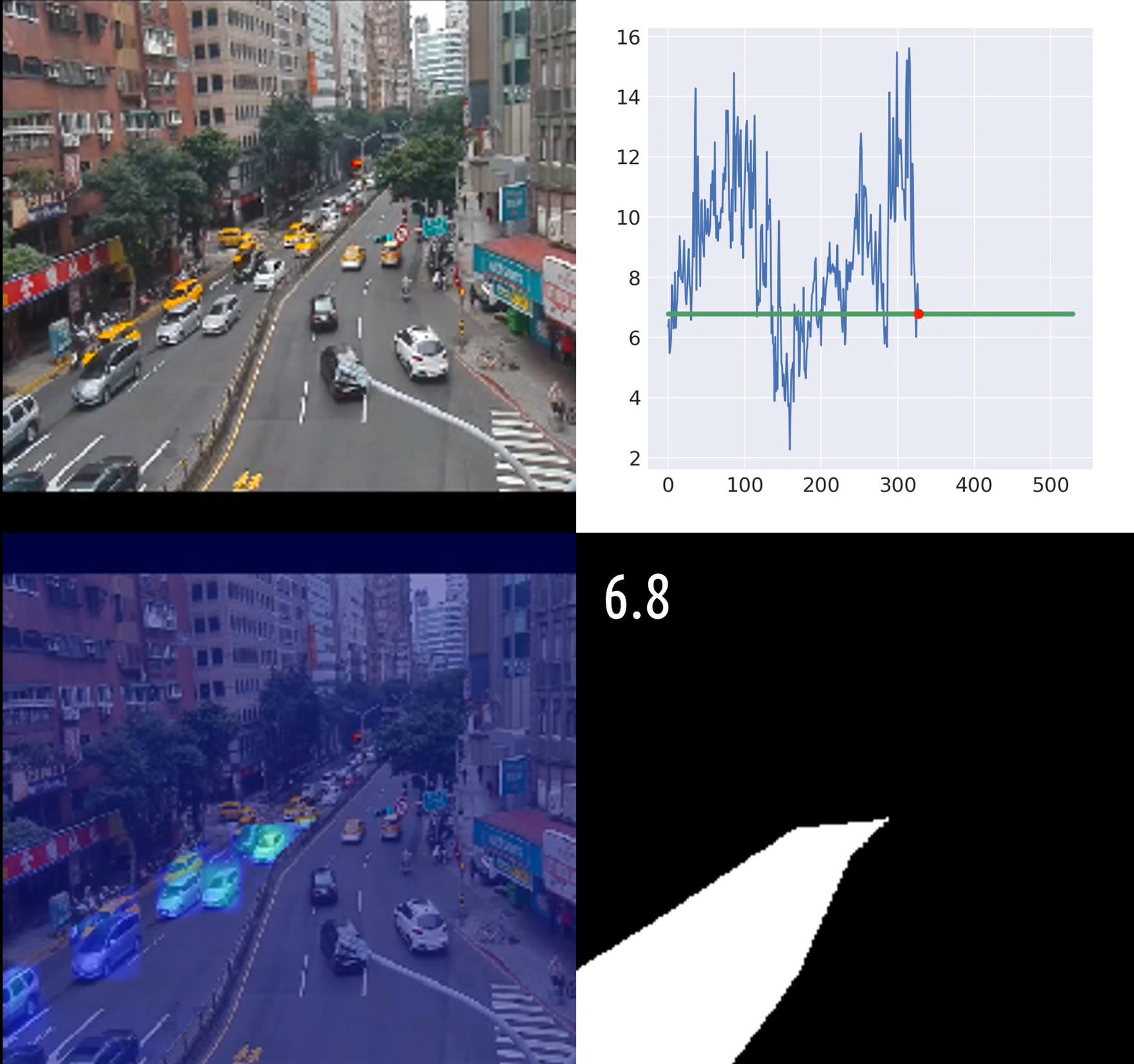

Taipei Traffic Density Network for Traffic Analysis

In this project, we built an FCN-style neural network, Taipei Traffic Density Network (TTDN), for analyzing urban traffic. The network generates vehicle density maps from road-side cameras, which are usually low-frame-rate, low-resolution, and low-contrast. We use this network to estimate real-time traffic counts in Taipei City, Taiwan.
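As a rough illustration of why a density map is useful for counting, the sketch below assumes the common convention that each vehicle contributes a total mass of 1 to the map, so summing the map gives the estimated count. It is not the TTDN implementation; the helper names `make_toy_density` and `count_from_density` and the toy 3x3 spreading rule are assumptions made only for this example.

```python
def make_toy_density(height, width, centers):
    """Build a toy density map (hypothetical helper, not part of TTDN).

    Each vehicle center spreads a total mass of 1.0 evenly over the
    in-bounds cells of its 3x3 neighborhood.
    """
    density = [[0.0] * width for _ in range(height)]
    for r, c in centers:
        cells = [
            (rr, cc)
            for rr in range(r - 1, r + 2)
            for cc in range(c - 1, c + 2)
            if 0 <= rr < height and 0 <= cc < width
        ]
        for rr, cc in cells:
            density[rr][cc] += 1.0 / len(cells)
    return density


def count_from_density(density_map):
    """Estimate the vehicle count as the sum of all density values."""
    return sum(sum(row) for row in density_map)


# Three toy vehicles, including two near the image border.
toy_map = make_toy_density(5, 6, [(0, 0), (2, 3), (4, 5)])
estimated_count = count_from_density(toy_map)
```

Because the count is a sum over pixels, the same idea extends to counting within a sub-region of the frame, such as a single lane, by summing only the cells in that region.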

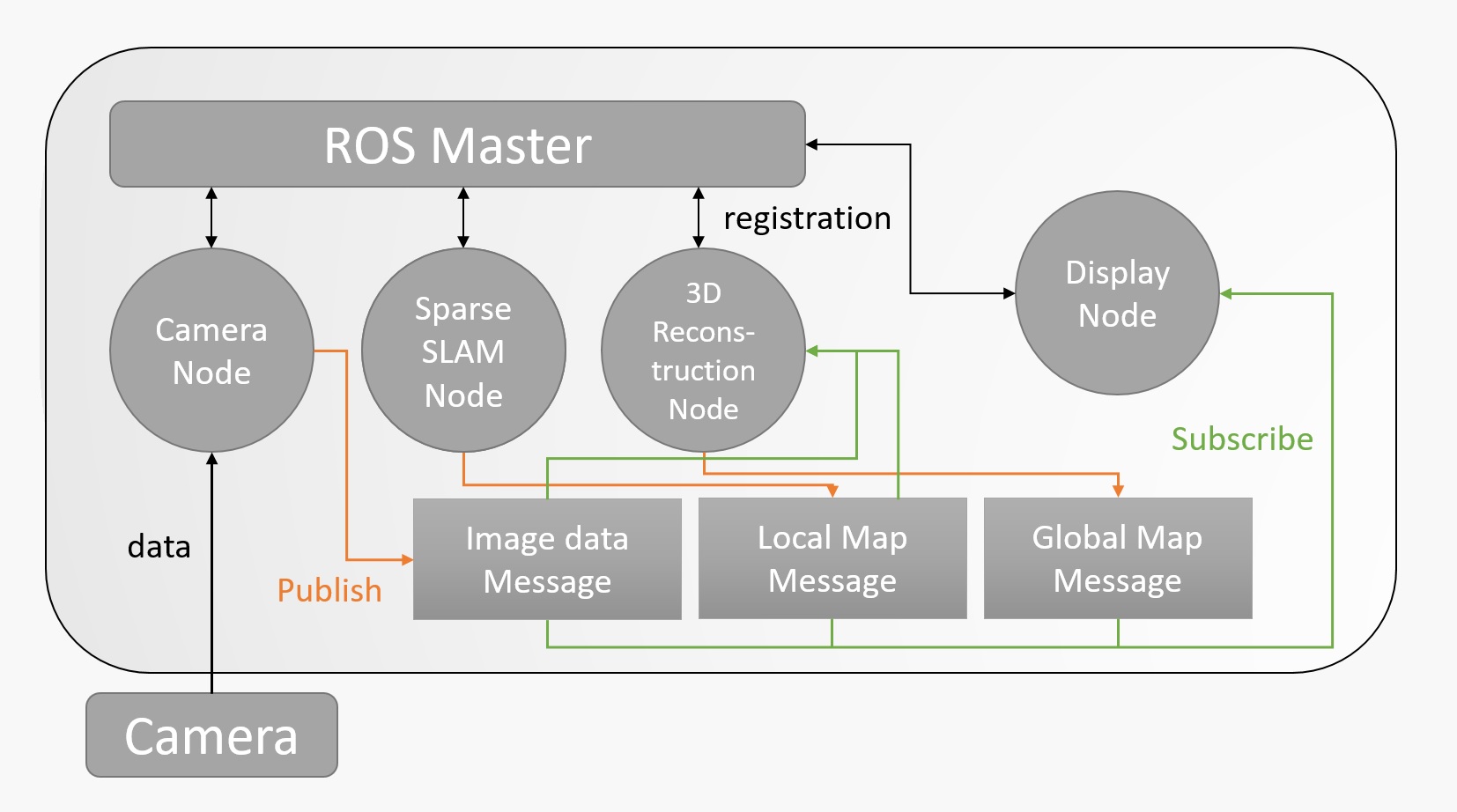

Bridge Inspection with Unmanned Aerial Vehicle

Bridge inspection is an important task that usually requires a great deal of human labor. We used SLAM and reinforcement learning to make the drone plan 3D routes and inspect the bridge automatically. The embedded system of our UAV is the NVIDIA Jetson TX2, which can run both SLAM and RL-based planning in real time.

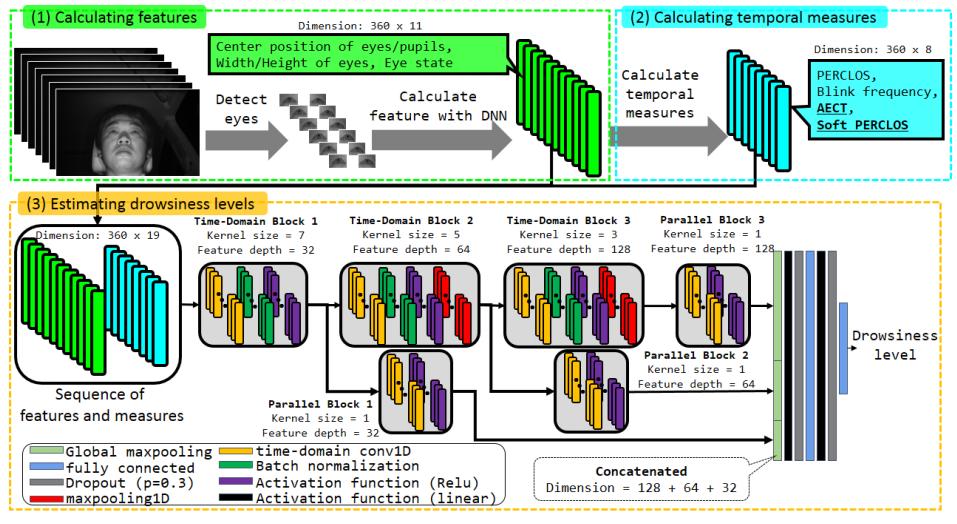

Driver Drowsiness Estimation from Facial Expressions

This project is a vision-based system that estimates driver drowsiness from facial expressions. The system estimates drowsiness levels accurately, which helps prevent fatigued driving at an early stage. Compared with previous approaches such as Electrogastrogram (EGG) or binary-detection CNN models, this system is both user-friendly and accurate.

publications

Tight approximation for partial vertex cover with hard capacities

Published in The 28th International Symposium on Algorithms and Computation, Dec. 2017

We consider the partial vertex cover problem with hard capacity constraints on hypergraphs. In this paper, we present a tight approximation for this problem. The new ingredient of this work is a generalized analysis of the extreme points of the natural LP and a strengthened LP lower bound for the optimal solutions.

Download here

Tight approximation for partial vertex cover with hard capacities

Published in Theoretical Computer Science, Jan. 2019

We consider the partial vertex cover problem with hard capacity constraints on hypergraphs. In this paper, we present a tight approximation for this problem. The new ingredient of this work is a generalized analysis of the extreme points of the natural LP and a strengthened LP lower bound for the optimal solutions.

Download here

Driver Drowsiness Estimation by Parallel Linked Time-Domain CNN with Novel Temporal Measures on Eye States

Published in The 41st IEEE International Engineering in Medicine and Biology Conference, Jul. 2019

We present a vision-based driver drowsiness estimation system that works from sequences of driver images. We propose a stage-by-stage system rather than an end-to-end one. Extensive experiments were conducted on a driving video dataset recorded in real cars. Our system achieves a high accuracy of 95.86% and an MAE of 0.4007.
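For readers unfamiliar with the MAE figure, the following minimal sketch shows how a mean absolute error is typically computed between predicted and annotated drowsiness levels. The level values below are made up for illustration, and the function name `mean_absolute_error` is an assumption; the paper's actual label scale and evaluation protocol are not described on this page.

```python
def mean_absolute_error(predicted, actual):
    """Average of |predicted - actual| over paired samples."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


# Hypothetical drowsiness levels, not data from the paper.
predicted_levels = [1.0, 2.5, 4.0]
annotated_levels = [1.0, 2.0, 5.0]
mae = mean_absolute_error(predicted_levels, annotated_levels)
```

A lower MAE means the predicted levels sit closer, on average, to the annotated ones, which is why it complements a plain accuracy figure when the output is a graded level rather than a binary label.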

Download here
